The Python API of MLX is very similar to that of NumPy, with a few exceptions. MLX also offers a fully featured C++ API that closely follows the Python API. While both MLX and NumPy are array frameworks, there are several key differences between them.

MLX supports composable function transformations for automatic differentiation, automatic vectorization, and computation graph optimization, so these transformations can be stacked on top of one another rather than used in isolation.

Unlike NumPy, computations in MLX are lazy: arrays are only computed when their values are actually needed, which can improve efficiency by skipping work whose results are never used.

MLX also supports multiple devices: operations can run on any supported device, currently the CPU or the GPU, which makes it a more versatile platform for machine learning workloads.

Just in time for the holidays, we are releasing some new software today from Apple machine learning research.

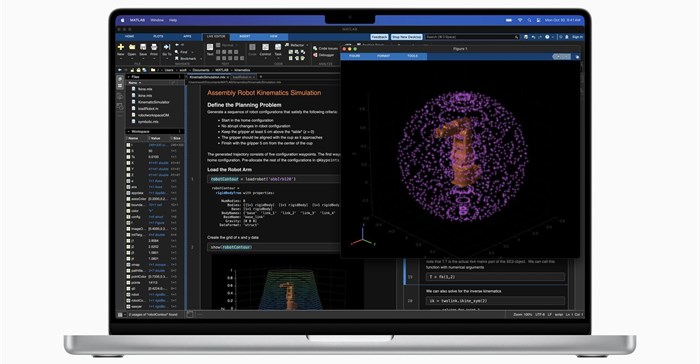

MLX is an efficient machine learning framework specifically designed for Apple silicon (i.e. your laptop!)

Code: https://t.co/Kbis7IrP80

Docs: https://t.co/CUQb80HGut

— Awni Hannun (@awnihannun) December 5, 2023

MLX's design draws inspiration from other frameworks such as PyTorch, JAX, and ArrayFire. A notable difference between those frameworks and MLX, however, is MLX's unified memory model, which takes advantage of the memory that the CPU and GPU share on Apple's ARM-based M-series SoCs.

In MLX, arrays live in shared memory, so operations on MLX arrays can run on any of the supported device types without copying data. The currently supported device types are the CPU and the GPU.

Apple's MLX release comes in the wake of other AI announcements from its biggest competitors like Google, Microsoft and Amazon.

Last week IBM and Amazon Web Services (AWS) announced an expansion of their partnership, aiming to help more clients operationalise and derive value from generative AI. As part of this expansion, IBM Consulting is set to deepen and expand its generative AI expertise on AWS, with a goal of training 10,000 consultants by the end of 2024.

The two tech giants also plan to deliver joint solutions and services, upgraded with generative AI capabilities, designed to help clients across critical use cases. This could enhance the capabilities of Amazon’s AI assistant, Amazon Q, giving users a more sophisticated and intuitive AI experience.

A key difference in Apple's approach is that it appears to center on how applications run on-device, with Apple not sending data off to its cloud servers.